Deepfake Pornography and Gender Violence

By Karla Morales

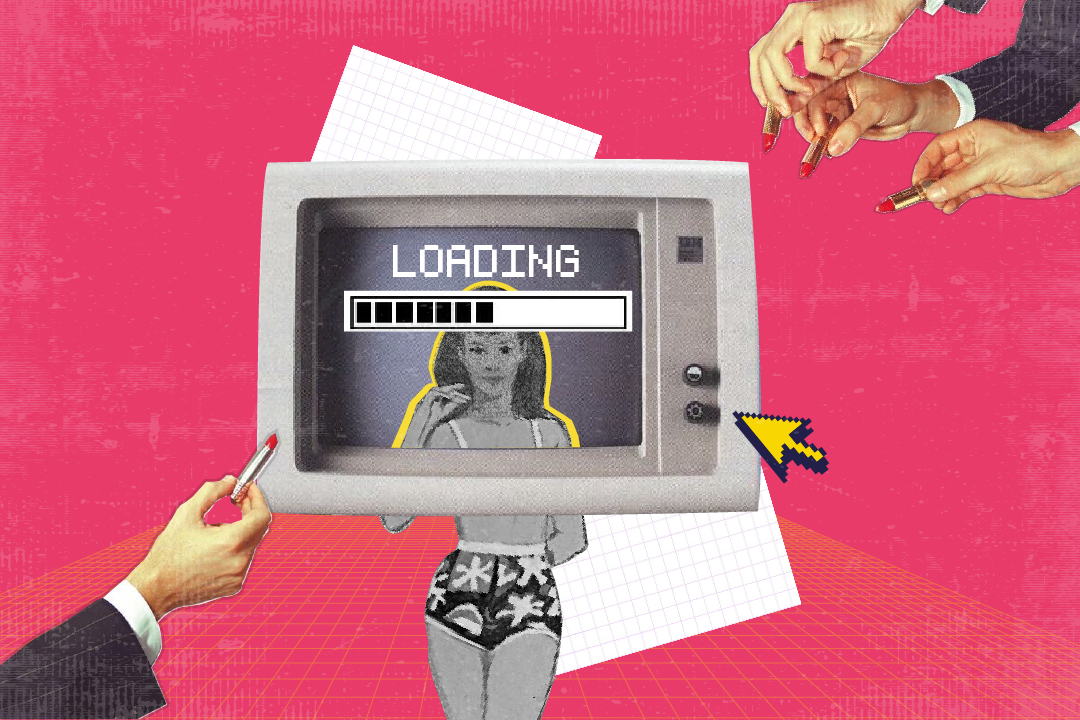

Image manipulation is nothing new. However, the way it is done has changed greatly in recent years with the boom in Artificial Intelligence. Major recent advances and the growing accessibility of this technology have made it easier to create strikingly realistic and deceptive sexual material. As a result, there is growing concern about the amount of non-consensual pornographic deepfake material circulating in virtual environments such as websites, social networks and even messaging platforms. In that sense, deepfake pornography has become a form of digital violence against women: the technology is used to create fake pornographic videos of women without their consent, for entertainment, mockery and/or revenge.

In this article, we analyze the relationship between deepfakes and pornography, and how deepfakes have become a tool for violating women’s rights.

What is a Deepfake?

A deepfake is produced with a technique that digitally alters images to place someone in a false scenario. This technique has improved steadily, so these fabricated images are increasingly polished and pass as authentic. In that sense, a deepfake is an image or audio clip that recreates reality so closely that ordinary perception cannot determine whether it is true or false (Cerdán, Padilla, 2019).

What makes these videos so credible is that deepfake algorithms learn through repetition, which allows them to explore and experiment with a range of possibilities, such as creating new faces that do not exist, impersonating real people, manipulating attributes (skin color, eyes, eye color, mouth, nose, etc.), changing facial expressions, synchronizing lip movements with speech and/or reproducing body movements.

Another important characteristic is that deepfakes rely on the viral mechanisms of social networks and digital platforms, which allow them to spread massively. As a result, they have had a great impact and have entered public discourse on politics, pornography, entertainment, misinformation and privacy.

Deepfakes produce personalized, hyperrealistic audiovisual content because they draw on hypermedia and transmedia visual narratives. This means that deepfakes feed on the content that users upload or share on digital platforms and social networks (Bañuelos, 2020). Beyond that, there are already cases in which people photograph others without their consent while they go about their daily lives, and then use these images in deepfake generation applications to create photographs and videos.

These applications are clearly becoming more accessible and easier to use, which leads to more hyperrealistic content being produced and makes it harder for people to distinguish reality from fantasy. The key question is to what extent such falsification violates human dignity and fundamental rights (Bañuelos, 2020).

The rise of deepfake porn and its victims

The term deepfake became popular in 2017, when a user calling himself Deepfakes took photographs of famous actresses, cut out their faces and embedded them on the bodies of actresses in pornographic films. This material was uploaded to Reddit, where it went viral. In just two months, the user’s subscriber count grew to 15,000, and the word deepfake spread as a general term for videos created using AI.

People who use these applications have been especially interested in creating manipulated pornographic material. Deepfakes do not disseminate real intimate images; instead, they fabricate them so that the intimacy of their protagonists seems credible (Cerdán, Padilla, 2019). As a result, not only celebrities but people of all kinds have been targeted by these malicious products.

According to the ‘State of deepfakes 2023’ study by Home Security Heroes, deepfake pornography represents 98% of all deepfake videos online, and 99% of the people targeted by deepfake pornography are women. Moreover, the ease of use and accessibility of these tools allows hyperrealistic content to be created very quickly. The study points out that, today, creating a 60-second deepfake pornographic video requires only one clear photo of the victim’s face and can be done in just 25 minutes, at no financial cost.

It is important to highlight that most of this material is produced without the victims’ consent and, because it usually consists of erotic or pornographic scenes, it can attack a person’s private life and dignity for the purposes of discrediting, ridicule and/or profit. Beyond identifying how these synthetic representations are used, it is also useful to define their subjective scope: who the active subjects are (those who create and use them) and who the passive subjects are (those on whom the manipulation is exercised) (Simó, 2023).

Given that traditional pornography is constructed on the objectification and hypersexualization of women’s bodies, pornographic deepfakes affect women directly, turning these montages into one of the main forms of digital violence against women and an emerging problem for which there is still no legal regulation.

Deepfakes: another form of digital violence against women

In the offline world, women have historically faced various forms of violence, shaped by a cis-heteropatriarchal system embedded in the social, economic, political and cultural spheres. It is therefore difficult to assume that the same will not happen in the online world. Although the online world is governed by other dynamics of socialization, the problem persists there and sometimes even worsens.

According to the Association for Progressive Communications, digital gender violence comprises acts of gender violence that are committed, instigated or aggravated, in part or entirely, by the use of information and communication technologies (ICTs), social media platforms and email. These acts cause psychological and emotional harm, reinforce prejudice, damage reputations, cause economic loss, pose barriers to participation in public life and can lead to sexual and other forms of physical violence (APC, 2015).

As communication technologies have advanced, violence against women has taken new forms and adapted to different contexts. In the specific case of deepfakes, the technology has been used to discredit or blackmail women in an attempt to silence or undermine them. This content is generally created without consent and, although the bodies in the montages are not the victims’, their faces are. This deeply damages their image: a woman’s identity and behavior can be manipulated with relative ease and impunity, because the falsehood is difficult to identify and the victim must combat the uncertainty and confusion that these synthetic productions generate (Simó, 2023).

Deepfakes are thus an emerging problem that reaches into the most intimate spheres of women’s lives, since the people who commonly create and disseminate this content are classmates, friends, brothers, boyfriends or ex-partners. For example, students at a school in Quito used photographs of their classmates to create around 700 sexual deepfake videos, affecting at least 20 students.

The combination of deepfakes and gender violence also serves abusive men who, as a method of revenge, use photographs shared on social networks to control, intimidate, isolate and shame their victims. In the case of female politicians, journalists or activists, the tool is used to portray them as objects of consumption and public scrutiny, focusing media attention on their image and bodies in order to damage their public image, delegitimize their leadership abilities and turn them into targets of hate on social media.

The problem with deepfakes is not only that they are crafted to be credible, but also that people want to believe them: when the content matches their ideological biases, they share it without verifying it, because they like it and want it to be true.

This form of digital violence has a direct impact on women’s mental health, with consequences such as depression, anxiety, self-harm and, as has already happened in some cases, suicide. It also violates several rights, including data protection, privacy, honor and self-image, access to justice and freedom of expression. The violation of data protection is particularly relevant because the information disseminated, although essentially false, is built from real personal data, and the harm is aggravated when these deepfakes are shared or distributed to third parties.

It is important to keep in mind that accountability for this problem falls not only on the creators of this content, but also on technology companies, the media and Internet platforms. It is therefore necessary to establish a legal framework with a gender perspective that makes it possible to identify violence against girls and women in digital environments and to set norms that ensure its eradication and the protection of girls and women in the digital sphere.

Bibliography:

- Bañuelos, J. (2020). Deepfake: la imagen en tiempos de la posverdad. Revista Panamericana de Comunicación, vol. 1, no. 2, pp. 51-61. Universidad Panamericana, Campus Ciudad de México.

- Cerdán, V.; Padilla, G. (2019). Historia del fake audiovisual: deepfake y la mujer en un imaginario falsificado y perverso. Historia y Comunicación Social, vol. 24, no. 2, pp. 505-520.

- Simó, E. (2023). Retos jurídicos derivados de la Inteligencia Artificial Generativa. Deepfakes y violencia contra las mujeres como supuesto de hecho. Universitat de València - Universidad de Buenos Aires.

- https://www.rtve.es/noticias/20231103/deepfake-pornograficos-aumentan-464-mujeres-principales-victimas/2459989.shtml

- https://tcmujer.org/dct/tmp_adjuntos/noEn/000/000/Mediciones%20VDG%20en%20America%20Latina%20y%20el%20Caribe.pdf